Posted: May 14th, 2014 | Author: khosrow | No Comments »

Back in February I added functionality to bring keepalived support to lvsm. As a result, I’ve achieved the first major goal of the program by supporting the two main directors available for LVS.

There are still a few bugs to fix before this release (0.5.0) becomes stable. But if you’d like to give it a try, please head to the release page and download a copy.

Posted: November 3rd, 2013 | Author: khosrow | Tags: postfix, python | No Comments »

Recently I re-did the code for track_msg. In doing so, I added a new option to search using message-id. The new version is available as a fancy new github release here.

And as usual details on how to get the latest source code and other information are available on the project page.

Posted: October 27th, 2012 | Author: khosrow | Tags: python, setuptools | No Comments »

Over the past month I’ve been working on a python project and I ended up learning about python packaging. Using these standard tools is a very useful way to distribute projects since they can be easily built by anyone or converted to standard packages such as .deb or .rpm.

I’ve now re-organized the code in track_msg so that it can be built and installed using standard python tools like pip. Build and install instructions can be found on the project page.

Posted: September 3rd, 2012 | Author: khosrow | Tags: email, postfix, python | No Comments »

I had initially written track_msg using python’s re module, but after some tests on fairly large logs (~250 MB) I realized that using built-in functions like those in python’s string module was much more efficient. With that change, the script ran reasonably fast on larger files.
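To illustrate the kind of difference described above, here is a small sketch that pulls the sender address out of a log line both ways. The log line and the function names are made up for this example and are not taken from track_msg. Actual timings depend on the pattern, the python version and the data, so treat the numbers it prints as a rough guide only.

```python
import re
import timeit

# Hypothetical postfix log line, invented for this example.
LINE = (
    "Sep  3 10:15:01 mail postfix/qmgr[2201]: 4F3A21C0D2: "
    "from=<alice@example.com>, size=1834, nrcpt=1 (queue active)"
)

FROM_RE = re.compile(r"from=<([^>]*)>")


def sender_with_re(line):
    """Extract the sender address with a compiled regular expression."""
    match = FROM_RE.search(line)
    return match.group(1) if match else None


def sender_with_str(line):
    """Extract the sender address with plain string methods."""
    marker = "from=<"
    start = line.find(marker)
    if start == -1:
        return None
    start += len(marker)
    end = line.find(">", start)
    return line[start:end] if end != -1 else None


if __name__ == "__main__":
    # Both approaches must agree before comparing their speed.
    assert sender_with_re(LINE) == sender_with_str(LINE)
    for func in (sender_with_re, sender_with_str):
        seconds = timeit.timeit(lambda: func(LINE), number=100_000)
        print(f"{func.__name__}: {seconds:.3f}s for 100,000 calls")
```

Running the file prints one timing per function, which makes it easy to check whether the string version is actually faster on a given machine.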

I think there’s still room for improvements but I’m going to move on for now, and see if there are any bugs that I’ll run into during daily use.

The latest release is available here

Posted: August 2nd, 2012 | Author: khosrow | Tags: email, postfix, python | No Comments »

A while back I had to do a lot of searching through postfix log files at work. I got tired of first having to find the original email based on the to and from information, and then searching the logs using the various queue IDs as the emails got queued by the mail daemon.

So, I wrote a python script that combined the process. It did the job and saved me a good amount of time. I then got carried away, added color to the output, and got a bit fancy, so I decided to make the script public. It is still not optimal, and processing large files (> 2 GB) takes longer than I’d like. Hopefully I’ll improve the performance in future iterations.
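The idea of combining the two manual steps can be sketched roughly as follows. This is not the track_msg code: the function names, the sample log lines and the regular expression are all assumptions made for illustration. In particular, it assumes postfix's default short queue IDs (uppercase hexadecimal) and it does not follow a message that is re-queued under a new ID.

```python
import re
from collections import defaultdict

# Assumption: short (hexadecimal) queue IDs printed right after "postfix/<daemon>[pid]: ".
QUEUE_ID_RE = re.compile(r"postfix/[\w/-]+\[\d+\]: ([0-9A-F]{6,}): ")


def queue_id(line):
    """Return the queue ID found on a log line, or None if there is none."""
    match = QUEUE_ID_RE.search(line)
    return match.group(1) if match else None


def track(lines, sender=None, recipient=None):
    """Return {queue_id: [log lines]} for messages matching sender and/or recipient."""
    by_id = defaultdict(list)
    from_hits, to_hits = set(), set()
    for line in lines:
        qid = queue_id(line)
        if qid is None:
            continue
        by_id[qid].append(line)  # remember every line that belongs to this ID
        if sender and f"from=<{sender}>" in line:
            from_hits.add(qid)
        if recipient and f"to=<{recipient}>" in line:
            to_hits.add(qid)
    wanted = set(by_id)
    if sender:
        wanted &= from_hits
    if recipient:
        wanted &= to_hits
    return {qid: by_id[qid] for qid in by_id if qid in wanted}


# Made-up log excerpt for demonstration purposes only.
SAMPLE = [
    "Aug  2 09:00:01 mail postfix/smtpd[811]: 3C1F2A0B11: client=host.example.com[192.0.2.10]",
    "Aug  2 09:00:01 mail postfix/cleanup[815]: 3C1F2A0B11: message-id=<1@example.com>",
    "Aug  2 09:00:01 mail postfix/qmgr[402]: 3C1F2A0B11: from=<alice@example.com>, size=912, nrcpt=1 (queue active)",
    "Aug  2 09:00:02 mail postfix/smtp[820]: 3C1F2A0B11: to=<bob@example.org>, relay=mx.example.org[198.51.100.5]:25, delay=0.9, status=sent (250 OK)",
    "Aug  2 09:00:02 mail postfix/qmgr[402]: 3C1F2A0B11: removed",
    "Aug  2 09:05:00 mail postfix/qmgr[402]: 7D2E9B4C55: from=<carol@example.com>, size=400, nrcpt=1 (queue active)",
]

if __name__ == "__main__":
    hits = track(SAMPLE, sender="alice@example.com", recipient="bob@example.org")
    for qid, log_lines in hits.items():
        print(qid)
        for log_line in log_lines:
            print("   ", log_line)
```

Note that this sketch keeps every line with a queue ID in memory, so it is only suitable for small logs. A version meant for multi-gigabyte files would need a different approach.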

More info is available on the track_msg page.

Posted: December 20th, 2011 | Author: khosrow | Tags: appspot, flickr, python, twitter | Comments Off on End of the line for t2f

Last week, twitter released a new version of their iPhone app and with it removed the option to use a custom image uploader. This means the end of the line for my t2f service, and I now have to use the more traditional way of posting from Flickr to twitter.

So this ends development or any improvements to t2f unless I find a new use for it.

Posted: March 29th, 2011 | Author: khosrow | Tags: kde, python, qt, todotxt | No Comments »

In an attempt to learn some of the KDE API and work on a todo list that I like, I’ve written a widget to view a todo.txt file and display it in a plasmoid. The initial code is very basic and even does some things wrong (I think), but it’s a start. I’m using it daily at work, and when I get a chance I’m improving on it.

For download links go to the project page.

Posted: February 4th, 2011 | Author: khosrow | Tags: appengine, python, twitter | No Comments »

I’ve made a little page for the t2f project. It’s a photo upload service that can be used with the Twitter app on iOS, and any other apps that allow custom image upload services. I was hoping the android version would support custom services, but it doesn’t and considering they were written by different people it’s no surprise.

It was just an excuse for me to practice on the Google App Engine, and it’s not ready for public use. I’ll have to clean it up a bit so I can at least publish the code.

Posted: October 29th, 2009 | Author: khosrow | Tags: internet, kubuntu, linux, Technology, Web 2.0, work | 2 Comments »

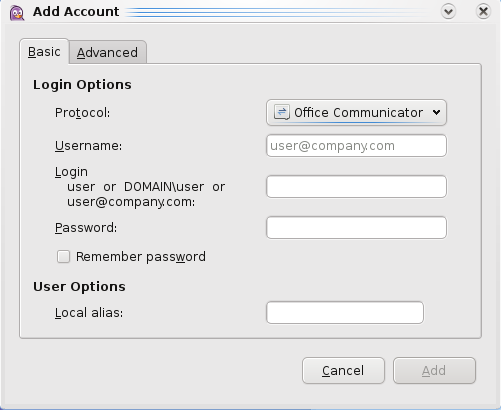

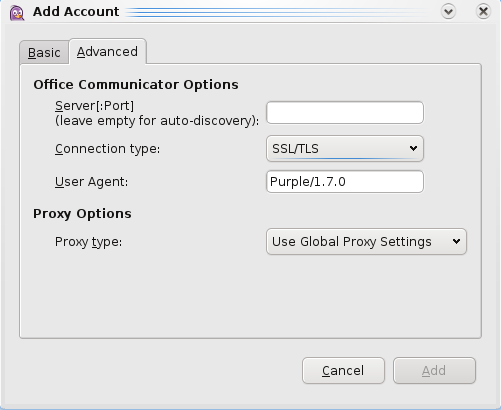

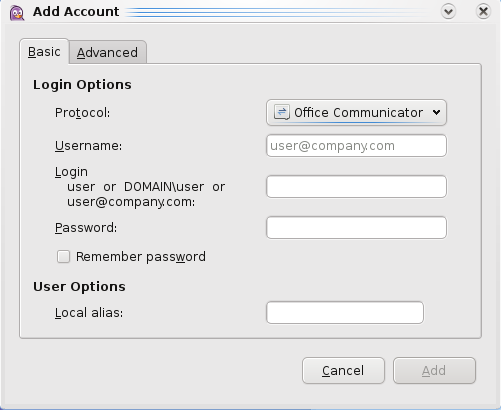

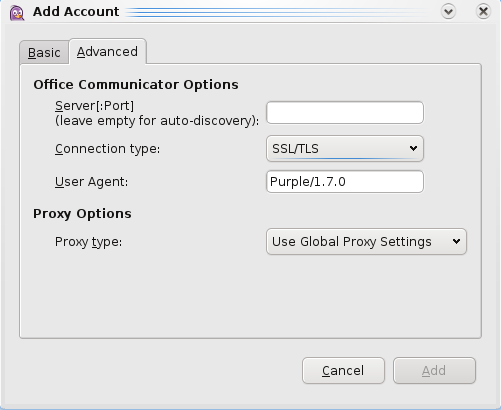

As with most things in tech, large companies catch on to the power of instant messaging late. Where I work is no exception. They rolled out Microsoft Office Communicator a couple of months ago (as a side note, that is a horrible landing page) and made much of the new and improved power of communication between employees. It’s a great thing that I can finally communicate using instant messaging, but the proprietary software threw a monkey wrench into my desktop setup. After some research I found out how to get my desktop to connect to the Office Communicator server: Pidgin and SIPE.

First, I needed to install Pidgin

sudo apt-get install pidgin

Then, I installed the TLS plugin for Pidgin

sudo apt-get install pidgin-encryption

Now, the important piece of the puzzle was SIPE, which is needed to connect to the proprietary server. I initially tried the usual

sudo apt-get install pidgin-sipe

But the version of SIPE available for jaunty was version 1.2-1 and it didn’t work. So, I went with the old school way of compiling my own binary. I got the code from here and followed the simple instructions on the same site. They are as follows:

tar -zxvf pidgin-sipe-1.7.0.tar.gz

cd pidgin-sipe-1.7.0

./configure --prefix=/usr/

make

sudo make install

Once it was installed, I started up Pidgin and, after entering the necessary info, connected successfully. You can see the detailed info of what I entered in the pictures above.

Posted: October 22nd, 2009 | Author: khosrow | Tags: debian, linux | Comments Off on Deploying a Large Website Painlessly on Debian

We run a large scale and highly visible website. This site is updated frequently, and is very complex. So far the way the site is updated is using subversion where the latest code is checked out into the public servers – after much testing, of course.

A typical release goes something like this:

checkout code from subversion

run a few scripts to modify database and generate intermediate files

generate various connections between site and underlying software

update underlying software

One problem with this approach is that developers inevitably tend to push last-minute fixes while in testing mode. It’s easy to update the code with an svn co, but the code always tends to diverge and one fix usually leads to other bugs! Another issue is that each time a release is made, a long list of complicated steps (different each time) has to be followed. There are many other issues as well that I won’t go into right now, but suffice it to say each release is as easy as pulling your own tooth!

So, one idea to cut down on all this trouble is to build a deb package for each release. This essentially locks down development, since each code change involves building a new package. I’m also fairly certain it will make life in the software lifecycle much easier.

And the debianized release would go like this:

apt-get install website package

apt-get install underlying software

Or even simpler if I made the website package depend on the underlying stuff:

apt-get install website package
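To make the dependency idea concrete, here is a minimal sketch that renders the control file for such a package. Everything in it is hypothetical: the package names, the version numbers and the helper function are placeholders, not the real site's packaging. The one thing it relies on is that the Depends field is what lets apt pull in the underlying software automatically.

```python
def render_control(package, version, maintainer, description, depends=()):
    """Render a minimal binary-package control file as text.

    All values passed in are placeholders chosen by the caller.
    """
    fields = [
        ("Package", package),
        ("Version", version),
        ("Architecture", "all"),
        ("Maintainer", maintainer),
        # Depends is what makes apt install the underlying software too.
        ("Depends", ", ".join(depends)),
        ("Description", description),
    ]
    # Skip empty fields (for example, no dependencies at all).
    return "".join(f"{name}: {value}\n" for name, value in fields if value)


if __name__ == "__main__":
    # Hypothetical names and versions, for illustration only.
    print(render_control(
        package="example-website",
        version="1.0.0",
        maintainer="Web Team <webteam@example.com>",
        description="Example website release",
        depends=["example-backend (>= 2.0)", "example-db-tools"],
    ), end="")
```

The resulting text would go into DEBIAN/control inside the package directory before building it with a tool such as dpkg-deb. A real release would more likely use the standard debian/ source layout and its helper tools.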

Now if only I could get the decision makers to agree.